
Mastering Model Context Protocol (MCP): how to give your AI Code Assistant tools to use

Posted by Davin Ryan . Jun 30.25

We are regularly reminded that large language models (LLMs) will revolutionise how we work, automate complex tasks and enhance productivity across industries.

These models have transformative potential, but agentic AI and Model Context Protocol (MCP) servers may be the most revolutionary capabilities for product engineering so far.

While the Model Context Protocol is still immature, it’s evolving fast. Here, we explore why it matters and walk through a simple MCP server implementation.

Why MCP servers matter for product engineering

The fundamental value proposition of Model Context Protocol servers lies in their ability to make LLMs significantly more capable with minimal additional engineering effort. The Model Context Protocol achieves this by making it easy to connect LLMs to:

- Tools – powerful functions that allow LLMs to interact with external systems, perform computations and take actions in the real world

- Resources – content that can be used to provide context to an LLM so that you don’t have to repeat yourself or describe everything you are doing

- Prompts – reusable templates and workflows that can be provided to LLMs to standardise and share common interactions that achieve a specific outcome
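Behind these three capabilities sits a small set of JSON-RPC 2.0 methods from the MCP specification: `tools/list` and `tools/call`, `resources/list` and `resources/read`, and `prompts/list` and `prompts/get`. The TypeScript sketch below is our own illustration of the requests a client might send, not part of any SDK; the `buildRequest` helper and the `get_forecast` tool name are hypothetical.

```typescript
// Method names defined by the MCP specification for each server primitive:
// one to discover what is available, one to use a specific item.
const PRIMITIVE_METHODS = {
  tools: { list: "tools/list", use: "tools/call" },
  resources: { list: "resources/list", use: "resources/read" },
  prompts: { list: "prompts/list", use: "prompts/get" },
} as const;

type Primitive = keyof typeof PRIMITIVE_METHODS;

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextId = 1;

// Hypothetical helper: builds the JSON-RPC message a client would send to
// list or use one kind of primitive. Each request gets a fresh numeric id.
function buildRequest(
  primitive: Primitive,
  action: "list" | "use",
  params?: Record<string, unknown>,
): JsonRpcRequest {
  const request: JsonRpcRequest = {
    jsonrpc: "2.0",
    id: nextId++,
    method: PRIMITIVE_METHODS[primitive][action],
  };
  if (params !== undefined) request.params = params;
  return request;
}

// Ask a server to run a (hypothetical) forecast tool with two arguments.
// tools/call takes the tool's name plus an "arguments" object.
const call = buildRequest("tools", "use", {
  name: "get_forecast",
  arguments: { latitude: 47.61, longitude: -122.33 },
});
console.log(JSON.stringify(call));
```

The same helper would produce `resources/read` (with a resource URI) or `prompts/get` (with a prompt name) requests; only the method and parameters change.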

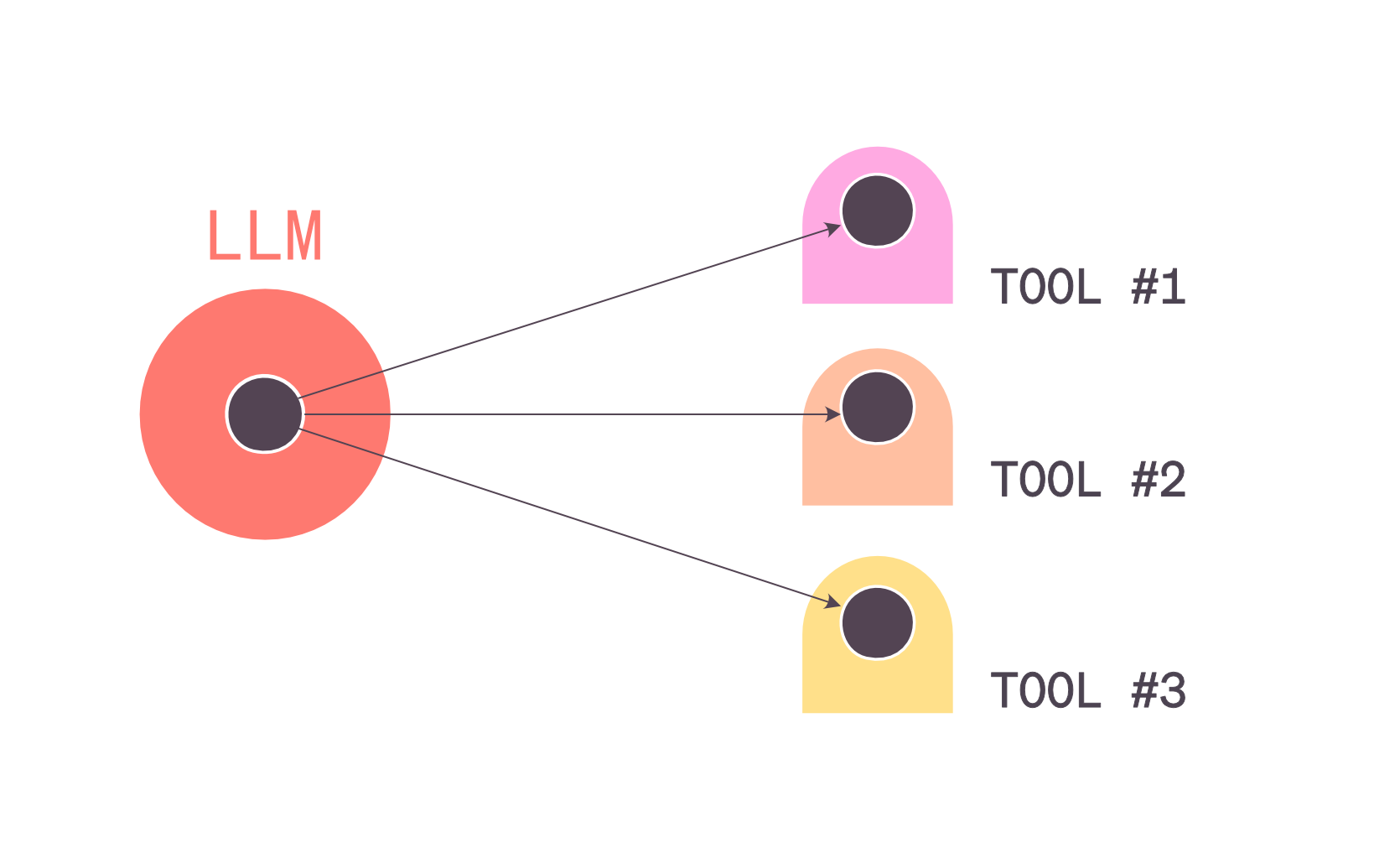

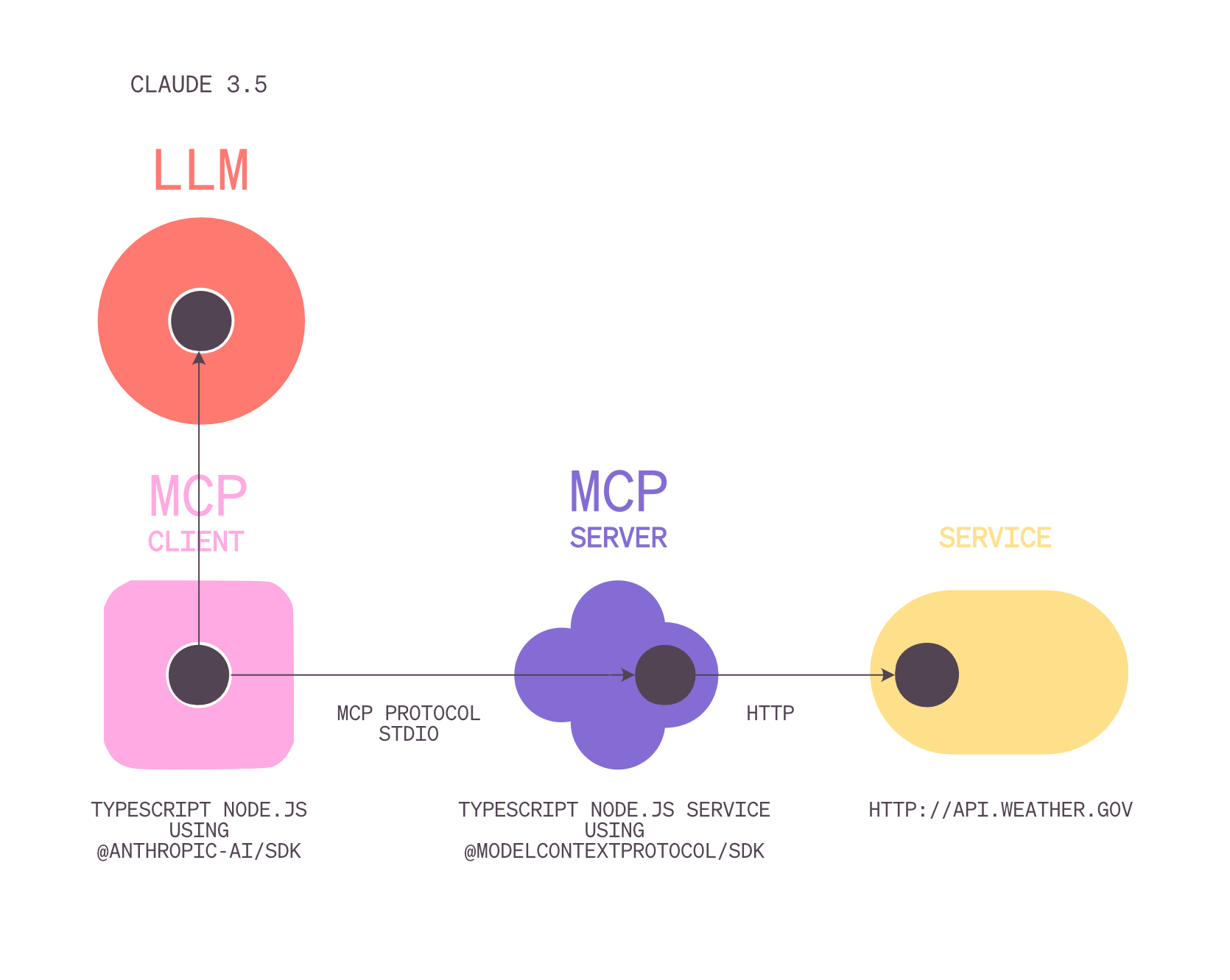

This represents a dramatic improvement over previous approaches, in which connecting LLMs to external tools and resources required extensive custom integration work for each LLM and was almost impossible to scale.

Below: LLMs currently have direct custom integration points with external and internal tools

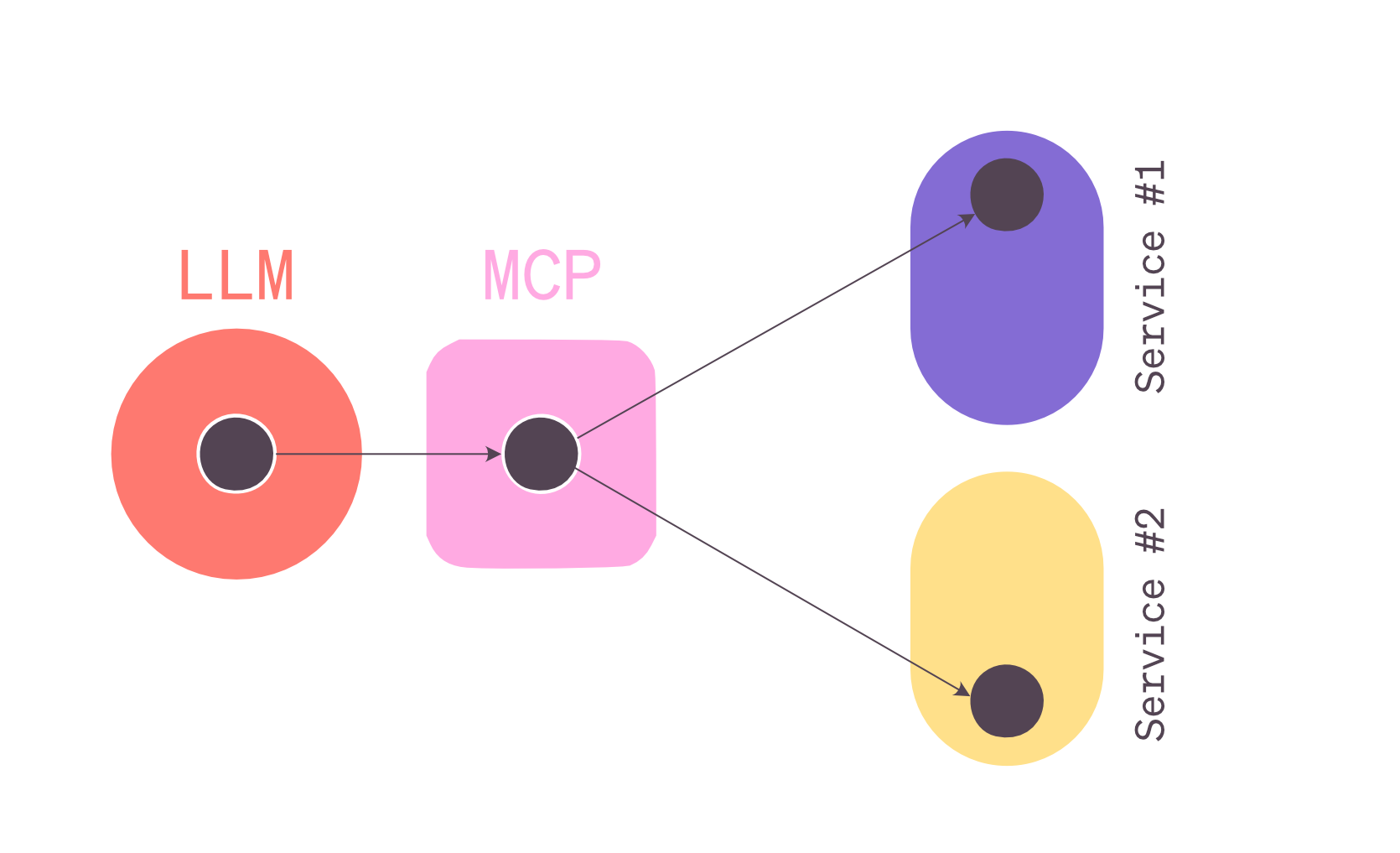

The design of the Model Context Protocol addresses one of the primary obstacles to LLM adoption in enterprise environments: the complexity of maintaining multiple tool integrations. Rather than managing dozens of custom connections that break unpredictably when external APIs change, organisations can rely on service providers to maintain their own MCP servers while using a stable, standardised interface for communication.

Below: an MCP server providing a common interface to all services and tools

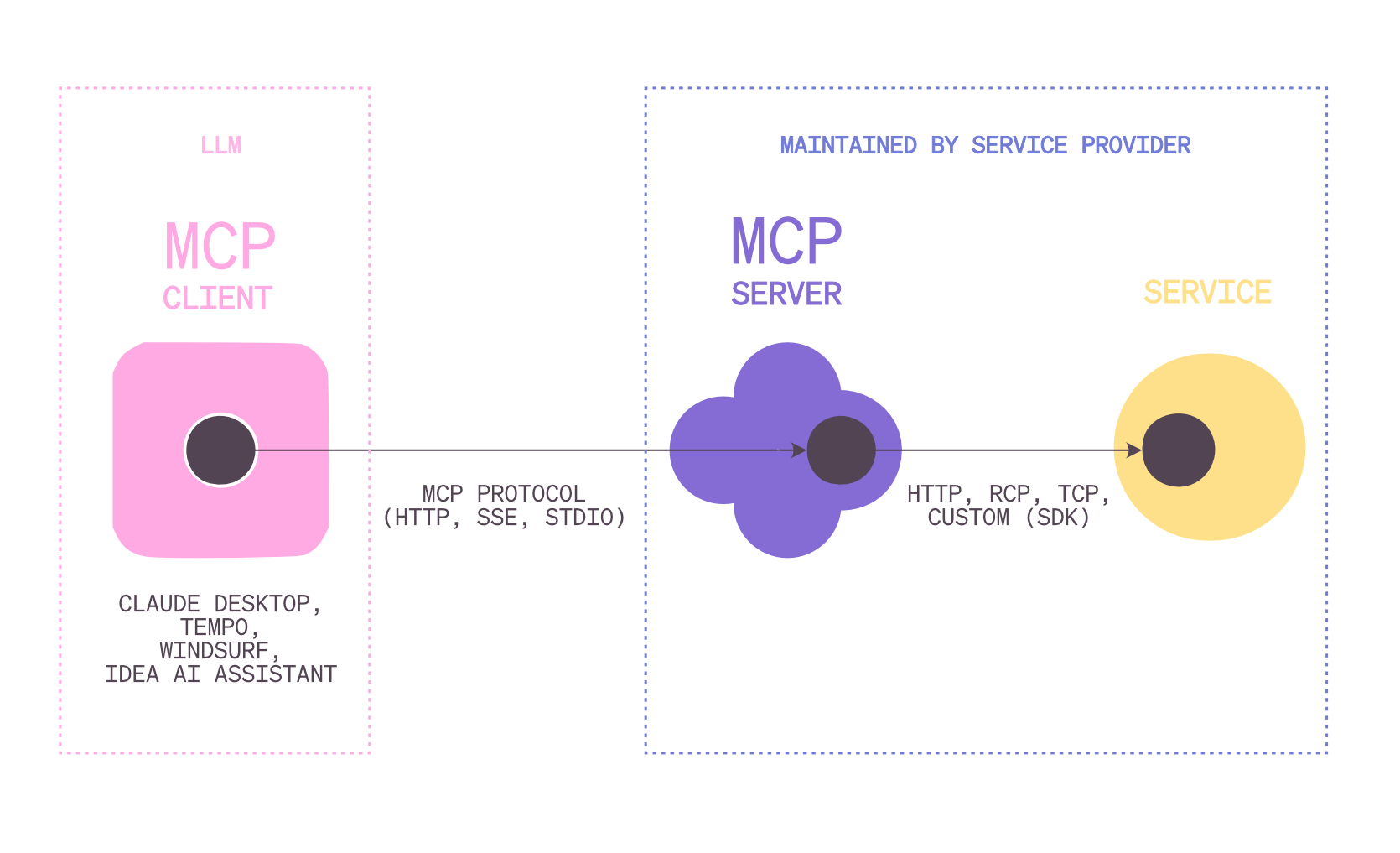

Above: MCP server architecture and ownership boundaries.

Organisations can now connect their AI assistants to their own internal systems, databases and external APIs through a standardised protocol that eliminates the need for bespoke integration solutions. This lower barrier to entry means businesses can experiment with AI-enhanced workflows without committing to major engineering projects.
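The scaling argument is easy to quantify. If M AI assistants each need a custom connector to each of N tools, someone has to build and maintain M × N integrations; if each assistant implements MCP once and each tool exposes one MCP server, the count falls to M + N. The scenarios below are made-up numbers, chosen only to show how quickly the gap widens.

```typescript
// Connectors to build and maintain when every AI client needs a custom
// integration with every tool (point-to-point).
function pointToPoint(clients: number, tools: number): number {
  return clients * tools;
}

// With a shared protocol, each client implements MCP once and each tool
// exposes a single MCP server.
function viaProtocol(clients: number, tools: number): number {
  return clients + tools;
}

// Hypothetical scenarios: [number of AI clients, number of tools].
const scenarios: Array<[number, number]> = [
  [2, 5],
  [4, 25],
  [10, 100],
];

for (const [clients, tools] of scenarios) {
  console.log(
    `${clients} clients, ${tools} tools: ${pointToPoint(clients, tools)} custom ` +
      `integrations vs ${viaProtocol(clients, tools)} MCP implementations`,
  );
}
```

For four assistants and 25 tools, that is 100 custom integrations against 29 protocol implementations, and the difference grows with every tool or assistant added.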

Perhaps most importantly, MCP servers let people stay within the context of their ongoing work rather than switching between multiple applications and interfaces. For developers, this means being able to check build status, analyse GitHub repositories and access deployment pipelines without leaving their development environment, reducing cognitive load and context switching. This can be a significant productivity improvement over traditional workflows.

Building an MCP server is a good way to understand how these servers work and how to use them effectively.

Building your first MCP server

This example is largely based on the great work done by Anthropic.

In this example, we’ll use a custom Node.js client that calls an LLM, together with a Node.js MCP server that retrieves weather forecasts for that LLM by calling http://api.weather.gov, as shown below.

Simple example of architecture and technology used

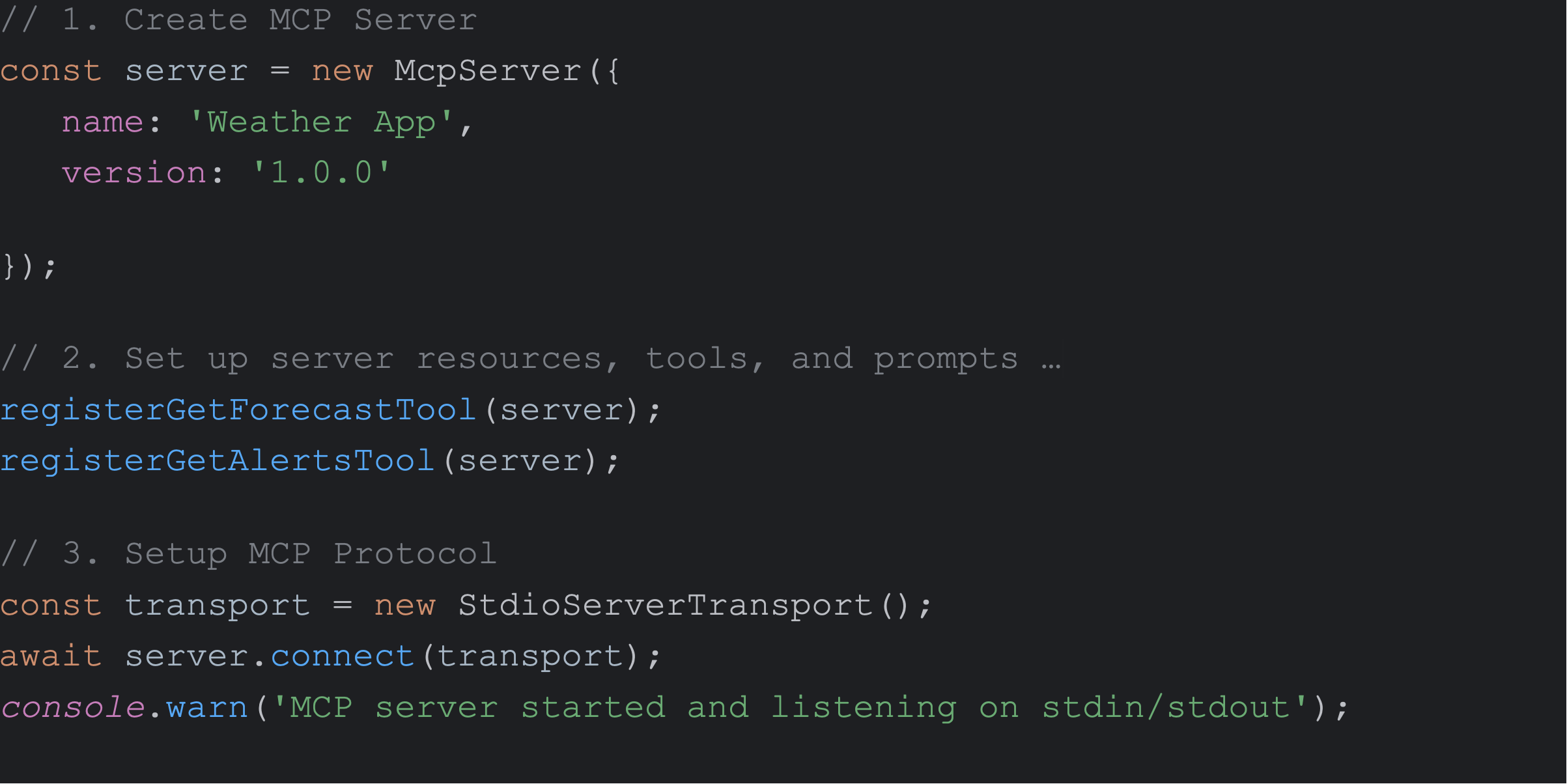
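The weather lookup itself is a two-step call to the US National Weather Service API (served over HTTPS at https://api.weather.gov): first resolve the coordinates to a forecast URL through the `/points/{latitude},{longitude}` endpoint, then fetch that URL for the forecast periods. In the sketch below, the response fields we rely on (`properties.forecast` and `properties.periods`) reflect the NWS API as we understand it, the `USER_AGENT` value is a placeholder, and the fetch function is injected so the logic can be exercised without network access.

```typescript
// Base URL of the US National Weather Service API (US locations only).
const NWS_API_BASE = "https://api.weather.gov";
// The NWS API asks callers to identify themselves; this value is a placeholder.
const USER_AGENT = "weather-mcp-example/0.1";

// The subset of a forecast period this sketch relies on.
interface ForecastPeriod {
  name: string;
  temperature: number;
  temperatureUnit: string;
  windSpeed: string;
  windDirection: string;
  shortForecast: string;
}

// Injected so the lookup can be tested without network access.
type FetchJson = (url: string) => Promise<unknown>;

// Production implementation using the global fetch available in Node.js 18+.
const fetchJsonFromNws: FetchJson = async (url) => {
  const response = await fetch(url, {
    headers: { "User-Agent": USER_AGENT, Accept: "application/geo+json" },
  });
  if (!response.ok) throw new Error(`NWS request failed: HTTP ${response.status}`);
  return response.json();
};

// Step 1 resolves coordinates to a forecast URL; step 2 fetches the forecast.
async function getForecastPeriods(
  latitude: number,
  longitude: number,
  fetchJson: FetchJson = fetchJsonFromNws,
): Promise<ForecastPeriod[]> {
  const pointsUrl = `${NWS_API_BASE}/points/${latitude.toFixed(4)},${longitude.toFixed(4)}`;
  const points = (await fetchJson(pointsUrl)) as { properties?: { forecast?: string } };
  const forecastUrl = points.properties?.forecast;
  if (!forecastUrl) throw new Error("No forecast available for this location");

  const forecast = (await fetchJson(forecastUrl)) as {
    properties?: { periods?: ForecastPeriod[] };
  };
  return forecast.properties?.periods ?? [];
}
```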

The MCP architecture is deliberately simple in this example. At its core, an MCP server consists of three primary components: server creation using an appropriate SDK, tool registration that defines the available functions and a serving mechanism (the transport) that handles communication with clients.
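To make the three components concrete, here is a dependency-free TypeScript sketch. It is not the official MCP TypeScript SDK, which provides its own server and transport classes; `MiniMcpServer`, `registerTool` and `handle` are hypothetical names chosen to mirror server creation, tool registration and serving.

```typescript
// Result shape for a tool call: a list of text content items.
interface ToolResult {
  content: Array<{ type: "text"; text: string }>;
  isError?: boolean;
}

type ToolHandler = (args: Record<string, unknown>) => Promise<ToolResult>;

interface RegisteredTool {
  description: string;
  inputSchema: Record<string, unknown>;
  handler: ToolHandler;
}

class MiniMcpServer {
  readonly info: { name: string; version: string };
  private readonly tools = new Map<string, RegisteredTool>();

  // 1. Server creation: identify the server to connecting clients.
  constructor(info: { name: string; version: string }) {
    this.info = info;
  }

  // 2. Tool registration: make a function discoverable and callable.
  registerTool(name: string, description: string, inputSchema: Record<string, unknown>, handler: ToolHandler): void {
    this.tools.set(name, { description, inputSchema, handler });
  }

  // 3. Serving: whichever transport is used hands parsed requests to this method.
  async handle(method: string, params: Record<string, unknown> = {}): Promise<unknown> {
    if (method === "tools/list") {
      return {
        tools: Array.from(this.tools, ([toolName, tool]) => ({
          name: toolName,
          description: tool.description,
          inputSchema: tool.inputSchema,
        })),
      };
    }
    if (method === "tools/call") {
      const toolName = String(params["name"]);
      const tool = this.tools.get(toolName);
      if (!tool) throw new Error(`Unknown tool: ${toolName}`);
      return tool.handler((params["arguments"] ?? {}) as Record<string, unknown>);
    }
    throw new Error(`Unsupported method: ${method}`);
  }
}

const server = new MiniMcpServer({ name: "weather", version: "0.1.0" });
server.registerTool(
  "get_forecast",
  "Get the weather forecast for a latitude/longitude in the United States.",
  {
    type: "object",
    properties: { latitude: { type: "number" }, longitude: { type: "number" } },
    required: ["latitude", "longitude"],
  },
  // Stub implementation; a real tool would call the weather API here.
  async (args) => ({
    content: [{ type: "text", text: `Forecast for ${String(args["latitude"])}, ${String(args["longitude"])}: (stub)` }],
  }),
);
```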

The protocol supports three communication methods, each suited to specific use cases. Standard input/output (stdio) is the simplest option for local MCP servers, as it avoids network communication overhead entirely. Server-sent events (SSE) initially enabled real-time communication with remote servers but are now deprecated. The currently preferred approach for remote servers is the Streamable HTTP transport, which provides the reliability and scalability needed for production environments.
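For the stdio transport, the specification frames messages very simply: each JSON-RPC message is written as a single line, messages are delimited by newlines and must not contain embedded newlines, and stdout is reserved for protocol messages (logging belongs on stderr). A minimal sketch of that framing, with hypothetical helper names, might look like this:

```typescript
// Encodes one message for the stdio transport: a single line of JSON.
function encodeMessage(message: object): string {
  // JSON.stringify without indentation never emits a raw newline: newlines
  // inside strings are escaped as \n, so the output is always one line.
  return JSON.stringify(message) + "\n";
}

// Splits buffered stdin data into complete messages. Any trailing partial
// line is returned as `rest` so it can be prepended to the next chunk.
function decodeMessages(buffer: string): { messages: unknown[]; rest: string } {
  const lines = buffer.split("\n");
  const rest = lines.pop() ?? "";
  const messages = lines
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line) as unknown);
  return { messages, rest };
}
```

Because a message only counts once its newline arrives, a reader can accumulate chunks of arbitrary size and still hand complete JSON-RPC messages to the server.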

The flexibility of this architecture becomes apparent when you see how the same core logic can support multiple communication protocols with minimal code changes. Creating a local MCP server using standard input/output requires only basic server instantiation and tool registration. Converting it into a remote server that uses Streamable HTTP involves adding an HTTP server, for example with the popular Node.js framework Express, while keeping the tool registration logic identical.
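The sketch below illustrates the point with Node.js built-ins only (the `http` module stands in for Express to keep it dependency-free): one transport-agnostic handler is wired to both a stdio loop and an HTTP endpoint. The handler answers only MCP’s `ping` method, and the HTTP side is heavily simplified (one JSON-RPC request per POST, with no session handling or server-sent event streaming), so treat it as an illustration of the structure rather than a compliant Streamable HTTP implementation. The function names and port number are hypothetical.

```typescript
import * as http from "node:http";
import * as readline from "node:readline";

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params?: unknown;
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number | string | null;
  result?: unknown;
  error?: { code: number; message: string };
}

// Transport-agnostic core. This stub only answers "ping"; a real server would
// dispatch tools/list, tools/call and so on from here. (Notifications, which
// carry no id and expect no reply, are not handled in this sketch.)
function handle(request: JsonRpcRequest): JsonRpcResponse {
  if (request.method === "ping") return { jsonrpc: "2.0", id: request.id, result: {} };
  return { jsonrpc: "2.0", id: request.id, error: { code: -32601, message: "Method not found" } };
}

// Parses one raw message, answering with a JSON-RPC parse error if it is invalid.
function safeHandle(raw: string): JsonRpcResponse {
  let request: JsonRpcRequest;
  try {
    request = JSON.parse(raw) as JsonRpcRequest;
  } catch {
    return { jsonrpc: "2.0", id: null, error: { code: -32700, message: "Parse error" } };
  }
  return handle(request);
}

// Local transport: one JSON message per line on stdin, replies on stdout.
function serveStdio(): void {
  const lines = readline.createInterface({ input: process.stdin });
  lines.on("line", (line: string) => {
    if (line.trim() === "") return;
    process.stdout.write(JSON.stringify(safeHandle(line)) + "\n");
  });
}

// Remote transport (greatly simplified): one JSON-RPC request per HTTP POST.
function createHttpServer(): http.Server {
  return http.createServer((req, res) => {
    if (req.method !== "POST") {
      res.writeHead(405).end();
      return;
    }
    let body = "";
    req.setEncoding("utf8");
    req.on("data", (chunk: string) => {
      body += chunk;
    });
    req.on("end", () => {
      res.writeHead(200, { "Content-Type": "application/json" });
      res.end(JSON.stringify(safeHandle(body)));
    });
  });
}

// Pick a transport at start-up; the handler is identical either way.
export function start(useHttp: boolean): void {
  if (useHttp) createHttpServer().listen(3000);
  else serveStdio();
}
```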

Adding tools

The actual process of building an MCP server demonstrates how straightforward the implementation can be when you understand the essential components. Consider a weather service integration that provides forecast data and alert information through a standardised interface.

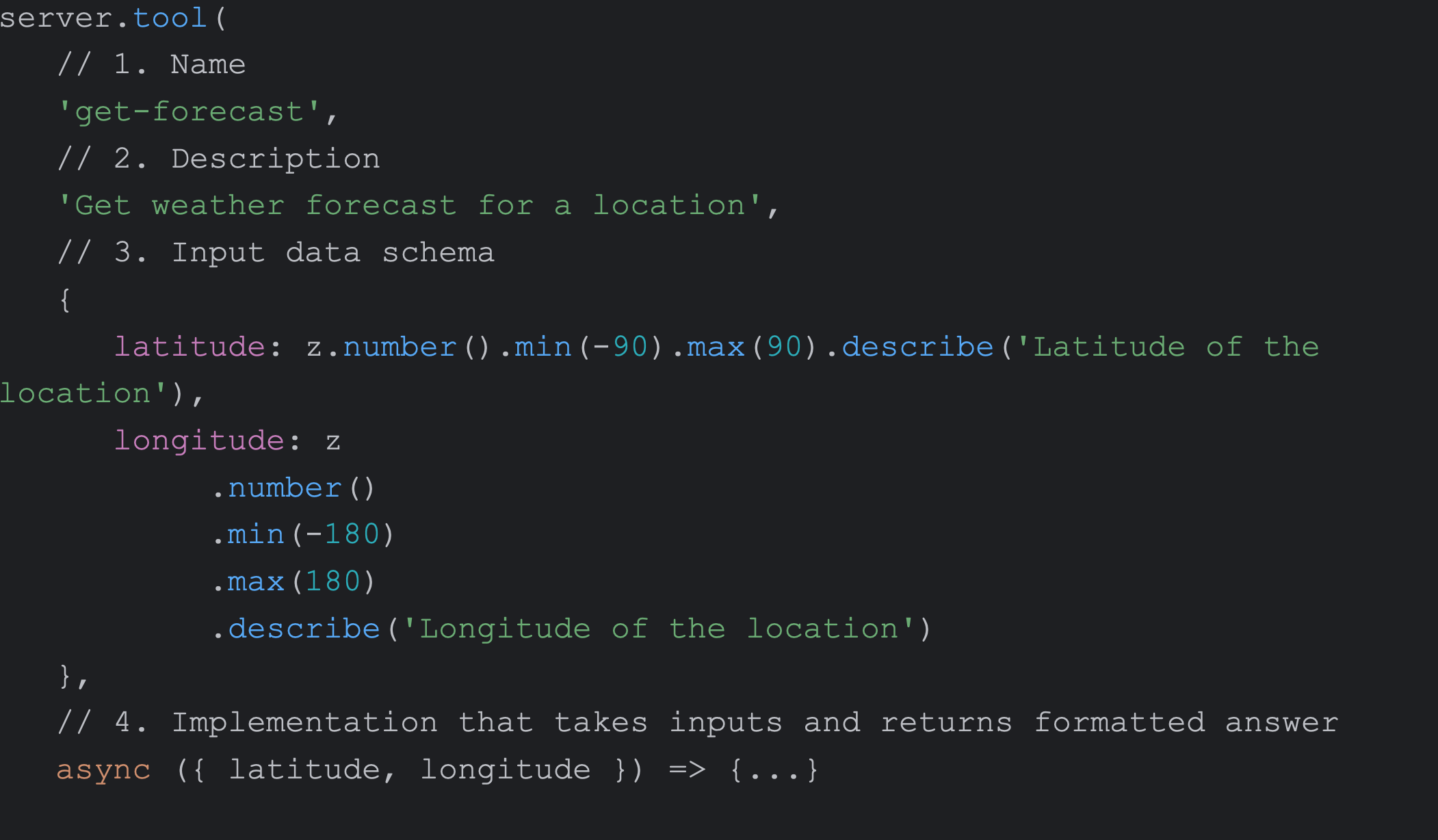

Tool registration represents the core of MCP server functionality, where developers define the specific functions that LLMs can invoke. Each tool requires four essential elements: a descriptive name that LLMs can reference, a detailed description that helps the AI understand when to use this function, a schema definition that specifies required parameters and the actual implementation function that performs the work.
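Here is how those four elements might look for the weather alerts tool, written as a plain TypeScript object rather than through the official SDK’s registration API. The `ToolDefinition` type and `makeAlertsTool` factory are hypothetical; `name`, `description` and `inputSchema` (a JSON Schema object) are the fields MCP clients receive from `tools/list`, while the implementation stays on the server. The alerts URL and response fields assume the NWS `/alerts/active/area/{state}` endpoint as we understand it.

```typescript
interface TextResult {
  content: Array<{ type: "text"; text: string }>;
}

// The four elements of a tool, expressed as a hypothetical type.
interface ToolDefinition<Args> {
  name: string; // what the LLM refers to
  description: string; // tells the LLM when the tool is appropriate
  inputSchema: Record<string, unknown>; // JSON Schema for the arguments
  run: (args: Args) => Promise<TextResult>; // the implementation
}

type FetchJson = (url: string) => Promise<unknown>;
interface AlertsArgs {
  state: string;
}

const NWS_API_BASE = "https://api.weather.gov";

// The fetch function is injected so the tool can be tested offline.
function makeAlertsTool(fetchJson: FetchJson): ToolDefinition<AlertsArgs> {
  return {
    name: "get_alerts",
    description:
      "Get active weather alerts for a US state. Use when the user asks about warnings, watches or severe weather.",
    inputSchema: {
      type: "object",
      properties: {
        state: { type: "string", description: "Two-letter US state code, e.g. CA", pattern: "^[A-Z]{2}$" },
      },
      required: ["state"],
    },
    run: async ({ state }) => {
      const data = (await fetchJson(`${NWS_API_BASE}/alerts/active/area/${state}`)) as {
        features?: Array<{ properties: { event: string; headline?: string } }>;
      };
      const alerts = data.features ?? [];
      const text =
        alerts.length === 0
          ? `No active alerts for ${state}.`
          : alerts.map((f) => `${f.properties.event}: ${f.properties.headline ?? "no headline"}`).join("\n");
      return { content: [{ type: "text", text }] };
    },
  };
}

// What a client sees from tools/list: everything except the implementation.
function toListing<Args>(tool: ToolDefinition<Args>) {
  return { name: tool.name, description: tool.description, inputSchema: tool.inputSchema };
}
```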

The description component deserves particular attention because it directly influences how effectively LLMs can select and use your tools. A well-crafted description provides sufficient context for the AI to understand what the function does, when it should be used and what types of queries it can address. This natural language guidance becomes critical when LLMs encounter ambiguous requests that multiple available tools could potentially handle.

Parameter schemas define the data structure your function expects to receive when invoked by an LLM. These schemas serve validation and documentation purposes, ensuring the AI provides correctly formatted input while helping future developers understand the function’s requirements.
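To illustrate what schema validation buys you, the sketch below checks tool arguments against a deliberately small subset of JSON Schema (object properties, `required`, numeric `minimum` and `maximum`). It is a hypothetical, hand-rolled validator for explanation only; a real server would typically rely on its SDK or an established validation library. Unlike default JSON Schema, it also rejects unexpected keys.

```typescript
// A tiny subset of JSON Schema, enough for flat argument objects.
interface PropertySchema {
  type: "number" | "string";
  minimum?: number;
  maximum?: number;
}
interface ObjectSchema {
  type: "object";
  properties: Record<string, PropertySchema>;
  required?: string[];
}

// Returns a list of problems; an empty list means the arguments are valid.
// Unknown keys are rejected, as if additionalProperties were false.
function validateArgs(schema: ObjectSchema, args: Record<string, unknown>): string[] {
  const errors: string[] = [];
  for (const key of schema.required ?? []) {
    if (!(key in args)) errors.push(`missing required argument "${key}"`);
  }
  for (const [key, value] of Object.entries(args)) {
    const prop = schema.properties[key];
    if (!prop) {
      errors.push(`unexpected argument "${key}"`);
      continue;
    }
    if (typeof value !== prop.type) {
      errors.push(`"${key}" must be a ${prop.type}`);
      continue;
    }
    if (typeof value === "number") {
      if (prop.minimum !== undefined && value < prop.minimum) errors.push(`"${key}" must be >= ${prop.minimum}`);
      if (prop.maximum !== undefined && value > prop.maximum) errors.push(`"${key}" must be <= ${prop.maximum}`);
    }
  }
  return errors;
}

// Schema for the forecast tool: valid latitude/longitude ranges.
const forecastSchema: ObjectSchema = {
  type: "object",
  properties: {
    latitude: { type: "number", minimum: -90, maximum: 90 },
    longitude: { type: "number", minimum: -180, maximum: 180 },
  },
  required: ["latitude", "longitude"],
};
```

Calling `validateArgs(forecastSchema, { latitude: 123 })` reports two problems, the missing `longitude` and the out-of-range `latitude`, before any weather API call is made.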

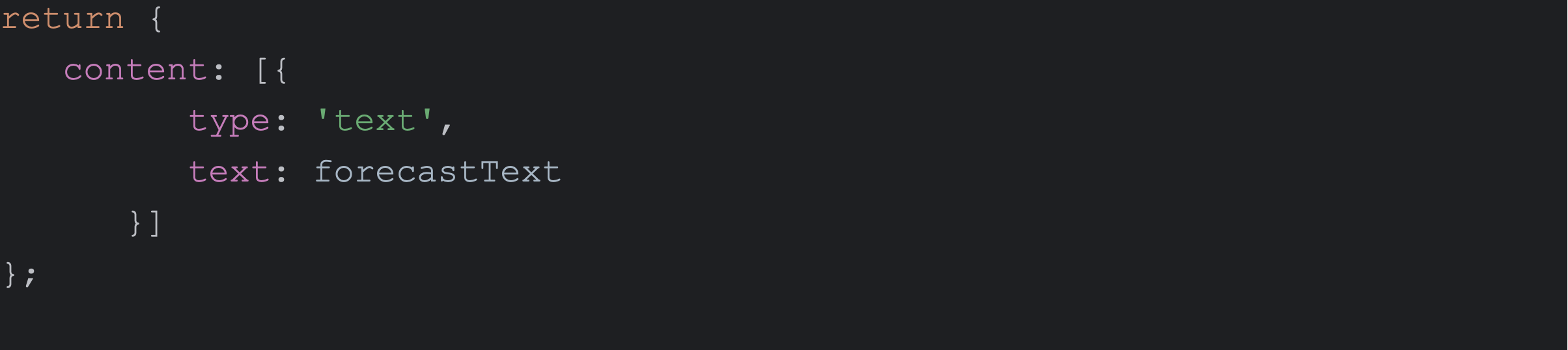

The implementation function handles the business logic, whether that involves making API calls, querying databases or performing calculations. The key requirement is that responses follow MCP’s standardised format, including a content type specification and the actual response data. This consistency enables LLMs to process responses predictably regardless of the underlying implementation complexity.
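In MCP, a tool call returns a result containing a `content` array of typed items (here only `type: "text"`) and an optional `isError` flag. The specification recommends reporting tool failures inside this result rather than as protocol-level errors, so the LLM can see what went wrong and react. The `runTool` wrapper below is a hypothetical helper showing both paths:

```typescript
// The result shape for a tool call, restricted to text content.
interface TextContent {
  type: "text";
  text: string;
}
interface CallToolResult {
  content: TextContent[];
  isError?: boolean;
}

// Hypothetical wrapper: runs the business logic and converts both outcomes
// into the standard result shape, so failures reach the LLM as readable text.
async function runTool(logic: () => Promise<string>): Promise<CallToolResult> {
  try {
    return { content: [{ type: "text", text: await logic() }] };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { content: [{ type: "text", text: `Tool failed: ${message}` }], isError: true };
  }
}
```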

Taking control of output

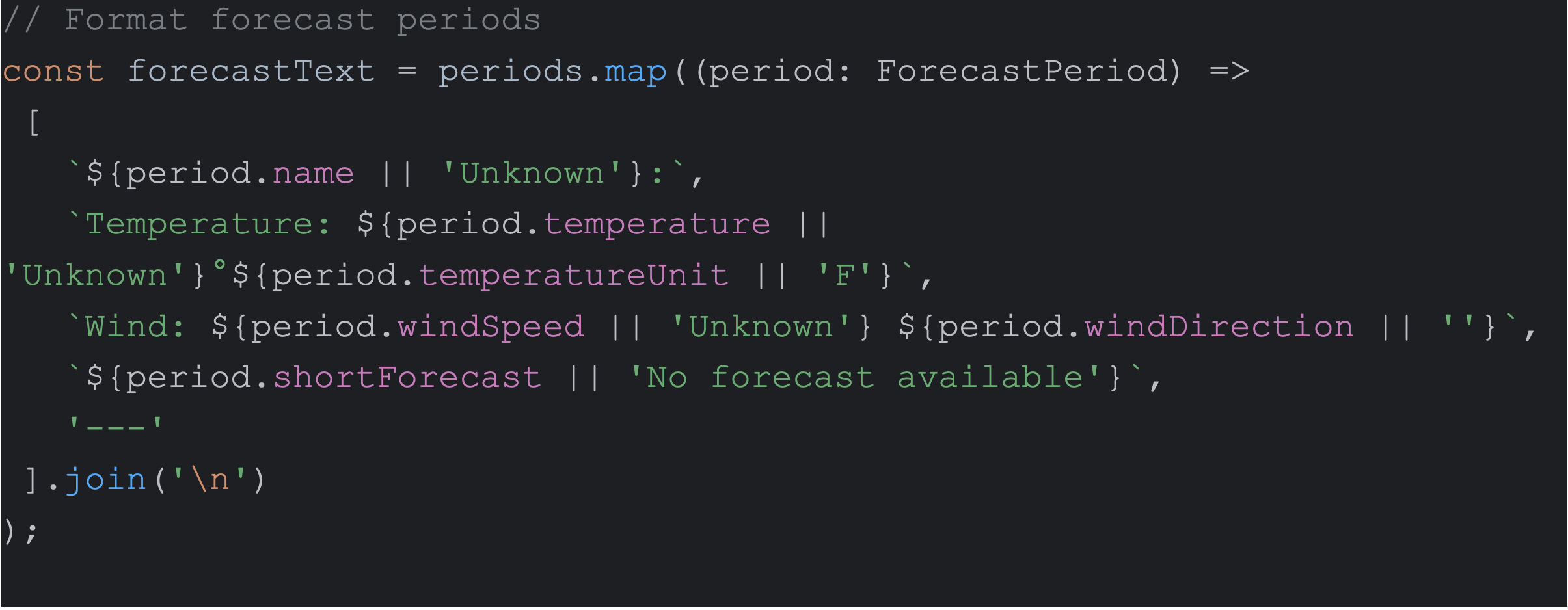

Another powerful aspect of MCP server development is the complete control you gain over response formatting. Unlike other AI integration approaches where output formatting depends on the LLM’s interpretation capabilities, MCP servers allow developers to structure responses exactly as needed for their specific use cases.

The formatting responsibility is split between the MCP server and the LLM in an interesting way. Developers control the precise structure and content of data returned from their functions, while LLMs interpret natural language queries and select appropriate tools. This division means you can ensure consistent, properly formatted output for business-critical tasks while leveraging the LLM’s natural language understanding for user interactions.

Response formatting becomes particularly important when dealing with complex data structures or domain-specific information that requires precise presentation. A weather forecast may include temperature ranges, precipitation probabilities and alert information, all of which need to be presented in a specific format to be most useful. The MCP server can keep this formatting consistent, regardless of how users phrase their requests.
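A small formatter is where that consistency is enforced. The sketch below renders NWS-style forecast periods in one fixed layout, however the question was phrased. The field names (including the optional `probabilityOfPrecipitation.value`) reflect our understanding of the NWS forecast response and should be checked against the live API; `formatForecast` itself is a hypothetical helper.

```typescript
// Forecast period fields assumed from the NWS forecast response.
interface ForecastPeriod {
  name: string;
  temperature: number;
  temperatureUnit: string;
  windSpeed: string;
  windDirection: string;
  shortForecast: string;
  probabilityOfPrecipitation?: { value: number | null };
}

// Renders one period in a fixed layout; the precipitation line is only
// included when the API actually supplies a value.
function formatPeriod(p: ForecastPeriod): string {
  const lines = [
    `${p.name}:`,
    `  Temperature: ${p.temperature}°${p.temperatureUnit}`,
    `  Wind: ${p.windSpeed} ${p.windDirection}`,
  ];
  const rain = p.probabilityOfPrecipitation?.value;
  if (rain !== undefined && rain !== null) lines.push(`  Chance of precipitation: ${rain}%`);
  lines.push(`  Conditions: ${p.shortForecast}`);
  return lines.join("\n");
}

// Same structure for every request: a heading, then up to maxPeriods periods.
function formatForecast(location: string, periods: ForecastPeriod[], maxPeriods = 3): string {
  if (periods.length === 0) return `No forecast periods available for ${location}.`;
  const sections = periods.slice(0, maxPeriods).map(formatPeriod);
  return `Forecast for ${location}\n\n${sections.join("\n\n")}`;
}
```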

The standardised response format requires specifying a content type and providing the actual response text. This simplicity makes integrating with existing APIs and data sources straightforward while maintaining the flexibility to present information in whatever format best serves your use case. Whether you need structured data, formatted reports or simple status messages, the response formatting approach remains consistent.

Current limitations and production readiness

Despite its potential, MCP implementation currently faces several significant limitations that organisations should understand before committing to production deployments. The MCP specification is still evolving rapidly because it remains in a draft state, and fundamental changes are still occurring: for example, server-sent events (SSE) have been deprecated as a transport in favour of Streamable HTTP.

The lack of finalised standards creates practical challenges for production environments. Many service providers currently distribute MCP servers as Docker images or binary downloads rather than offering them as managed services. This approach requires organisations to handle local installation, configuration and maintenance rather than consuming these capabilities as external services.

Protocol compatibility issues frequently arise between different LLM clients and MCP server implementations. Developers often discover that specific communication protocols work with some AI assistants but fail with others, forcing them to maintain multiple implementation approaches or limit their choice of LLM platforms. These compatibility challenges reflect the protocol’s early-stage maturity rather than fundamental design flaws.

Permission and authorisation systems represent the most significant gap in current MCP implementations. As LLMs gain more powerful capabilities through MCP integrations, organisations need robust mechanisms to control what actions these systems can perform. Current deployments often lack sophisticated permission models, creating potential security and operational risks that may limit enterprise adoption.

Development environment vs production deployment

The distinction between development and production readiness becomes crucial when evaluating MCP server implementations. While the technology isn’t ready for high-stakes production deployments, it offers significant value in development environments where the risk exposure remains manageable.

Development contexts provide ideal proving grounds for MCP server experimentation because failures have a limited impact, while successes can demonstrate clear productivity improvements. Developers can safely experiment with GitHub integrations, file system access and development tool connections without risking business-critical operations. This experimentation phase enables organisations to build expertise and identify valuable use cases before the technology reaches production maturity.

The risk assessment for MCP server deployment should focus on the potential consequences of system failures or security vulnerabilities. Low-risk scenarios, such as accessing documentation or providing read-only information, represent appropriate early adoption opportunities. High-risk scenarios like financial transactions or system modifications should wait for a more mature implementation with robust security and permission frameworks.
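One way to apply this risk assessment on the client side is to gate tool calls on the tool annotations defined in recent revisions of the MCP specification, such as `readOnlyHint` and `destructiveHint`. The specification treats these as hints that clients should not trust from untrusted servers, so the hypothetical policy below (run read-only tools automatically, ask a human before anything else, block destructive tools unless allow-listed) is a sketch of the idea rather than a security control.

```typescript
// A subset of the tool annotations defined by recent MCP spec revisions.
interface ToolAnnotations {
  readOnlyHint?: boolean;
  destructiveHint?: boolean;
}
interface ToolInfo {
  name: string;
  annotations?: ToolAnnotations;
}

type Decision = "allow" | "ask" | "deny";

// Hypothetical client-side policy keyed on annotation hints.
function decide(tool: ToolInfo, allowListed: ReadonlySet<string> = new Set<string>()): Decision {
  const hints: ToolAnnotations = tool.annotations ?? {};
  // Low risk: read-only tools (e.g. documentation lookups) run without prompting.
  if (hints.readOnlyHint === true) return "allow";
  // Tools that change state but declare themselves non-destructive need approval.
  if (hints.destructiveHint === false) return "ask";
  // Everything else is treated as destructive (the spec's default for
  // destructiveHint) and blocked unless explicitly allow-listed, in which
  // case a human still has to approve each call.
  return allowListed.has(tool.name) ? "ask" : "deny";
}
```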

Organisations considering MCP server adoption should focus on building internal expertise during this experimental phase rather than attempting large-scale deployments. Understanding how MCP servers work now provides valuable preparation for leveraging the technology effectively once it reaches production readiness. This learning investment enables organisations to respond quickly when mature MCP solutions become available.

Popular MCP servers and use cases

Several MCP server implementations have gained traction in development communities, providing practical examples of how the technology can enhance productivity. The filesystem MCP server enables AI assistants to access files on a local computer for information retrieval and management, creating a bridge between conversational interfaces and traditional file operations.

GitHub’s MCP server represents one of the most compelling use cases for improving developer productivity. Rather than manually navigating to GitHub interfaces to check build statuses, analyse repository history or review deployment pipelines, developers can query this information through natural language conversations with their AI assistants. This integration eliminates context switching, while providing access to comprehensive repository information.

ServiceNow’s MCP server addresses enterprise workflow challenges that consume significant developer time. Creating approval requests for production deployments often involves filling out complex forms with dozens of fields, which can take hours for each deployment. An MCP-enabled AI assistant can automate much of this form completion by automatically accessing code references, testing reports and compliance documentation.

The Context.dev MCP server demonstrates how external documentation can be integrated into development workflows without overwhelming AI assistants with excessive information. Rather than attempting to load all available documentation through retrieval-augmented generation approaches, developers can selectively access specific documentation versions and language references as needed during their work.

These examples illustrate MCP’s strength in eliminating routine friction from knowledge work rather than automating complex decision-making processes. The technology excels at information retrieval, status checking and form completion: tasks that interrupt productive work without adding significant value.

Future opportunities and strategic considerations

The opportunity landscape for MCP servers splits along technical and business dimensions, with different implications for organisations depending on their capabilities and objectives. Technical organisations can benefit immediately from reduced onboarding complexity and improved development environment integration, while business-focused organisations should focus on understanding the technology’s trajectory for future strategic positioning.

Technical teams can leverage MCP servers to create application stores for development tools, enabling one-click installation and configuration of integrations that previously required custom engineering work. This marketplace approach could significantly reduce the technical barriers that prevent many organisations from adopting AI-enhanced development workflows, while creating new revenue opportunities for tool creators.

The onboarding benefits become particularly compelling in enterprise environments where new developers typically spend weeks learning internal tools and processes. MCP servers can effectively proxy the knowledge of experienced team members, providing new developers with guided access to company systems without requiring extensive individual training or broad permission grants.

For business leaders, the strategic consideration involves appropriately timing market entry. The current experimental phase provides an opportunity to build understanding and identify potential applications, but significant investment should wait for protocol standardisation. Organisations that develop MCP expertise during this learning period will be positioned to move quickly when mature solutions become available.

The ecosystem effects of Model Context Protocol adoption suggest that early standard-setters may gain significant advantages as the technology matures. Organisations contributing to MCP server development or establishing expertise in specific domains could benefit from network effects as the protocol gains broader adoption. However, this potential upside must be balanced against the current implementation risks and uncertainty about final protocol specifications.

Understanding MCP servers as enablers rather than solutions helps frame appropriate expectations and investment decisions. Just as the computer mouse enabled graphical user interfaces, MCP servers provide the infrastructure that makes integrating tools with AI assistants practical at scale.

Organisations that recognise this enabling function can position themselves advantageously for productivity improvements, which will become possible when AI assistants can seamlessly access the tools and information that knowledge workers need to be effective.
