The IT fossil record: layers of architectural evolution

Posted by The HYPR Team, Col Perks . Oct 20.25

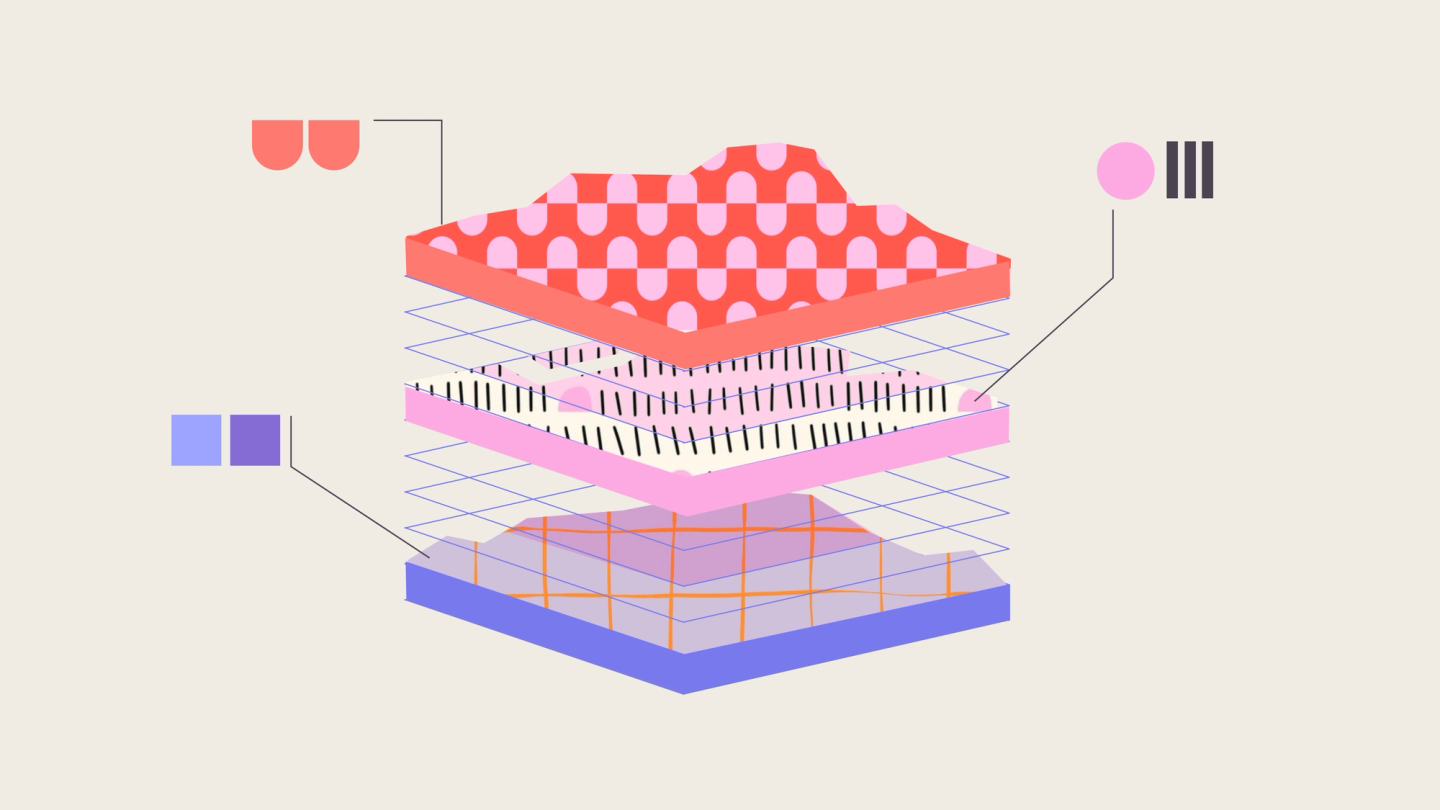

The metaphor of an IT fossil record captures how architectural thinking has evolved over decades. Like geological strata, each architectural era has left its mark, with some layers proving more durable than others. The question remains whether we’ve reached bedrock or are still building on shifting sands.

The 1990s represented the zenith of architectural framework thinking. Organisations invested heavily in comprehensive methods like TOGAF, treating certification as the hallmark of architectural competence.

These frameworks promised quality through completeness, offering hundreds of artefacts and detailed procedures for every conceivable situation. The instrumental rationality was seductive: follow the framework, achieve architectural excellence.

This framework-centric approach treated architecture as an upfront activity, separate from delivery. The waterfall process, where large batches of work move from one activity state to the next, dictated functional specialisation in teams and long planning and development cycles.

Enterprise architecture provided the mechanism for upfront design and out-of-cycle gap analysis. However, the emergence of agile methods, cloud computing and the need for faster iteration cycles exposed the limitations of this approach.

The shift wasn’t merely technological but philosophical. Where frameworks assumed predictable, controllable development processes, reality demonstrated the power of emergent design and continuous feedback. The distributed systems revolution made traditional architectural planning insufficient for managing the complexity of interconnected services and data flows, let alone the emergent properties of large socio-technical systems.

Domain-Driven Design’s enduring relevance

Among the architectural concepts that survived this transition, Eric Evans’ Domain-Driven Design stands as remarkably prescient. Published in 2003, before the cloud era and microservices revolution, DDD’s strategic patterns continue to provide valuable guidance for organising both code and services. The concept of bounded contexts translates elegantly from monolithic applications to distributed architectures.

DDD’s persistence stems from its focus on the fundamental challenge of managing complexity through clear boundaries and ubiquitous language. These concerns transcend specific technologies or scale, being almost fractal in nature. Whether organising classes within an application or services within a platform, the principles of separation of concerns and contextual clarity remain relevant.
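To make the bounded-context idea concrete, here is a minimal Python sketch. It assumes a hypothetical retailer with a billing context and a support context; the class and function names are illustrative and are not taken from Evans’ book. The same real-world customer is modelled separately in each context, and an explicit translation function marks the boundary between them.

```python
from dataclasses import dataclass


# Billing context: a "customer" is an account that owes money.
@dataclass(frozen=True)
class BillingCustomer:
    account_id: str
    balance_cents: int


# Support context: a "customer" is a person with open tickets.
@dataclass(frozen=True)
class SupportCustomer:
    contact_id: str
    display_name: str
    open_tickets: int


def to_support_view(
    billing: BillingCustomer, display_name: str, open_tickets: int
) -> SupportCustomer:
    """Translate at the boundary instead of sharing one model across contexts."""
    return SupportCustomer(
        contact_id=f"support:{billing.account_id}",
        display_name=display_name,
        open_tickets=open_tickets,
    )


# Hypothetical example data.
billing_customer = BillingCustomer(account_id="A-100", balance_cents=12_500)
support_customer = to_support_view(billing_customer, "Ana", open_tickets=2)
```

The support model never sees the balance, so a change to billing rules cannot leak into support code. The same shape applies whether the two contexts are packages in one application or separate services.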

The evolution from DDD to microservices to data mesh represents a consistent thread of thinking about how to organise systems around business capabilities rather than technical specialisation. Each iteration applies similar principles at different scales and with different technologies, but the underlying insight about the importance of business-aligned boundaries persists.

The data mesh revolution

Data mesh principles challenge the fundamental assumptions of traditional analytical architectures. The conventional approach of extracting data from operational systems into centralised analytical platforms treats databases as the source of truth, ignoring the semantic context embedded in the applications that generate the data.

Zhamak Dehghani’s insight was to apply the successful patterns of operational architecture to the analytical domain. Instead of monolithic data warehouses, data mesh proposes a distributed architecture of data products, each owned by the team closest to the business context. This approach preserves the knowledge and semantics that get lost in traditional extract-transform-load processes.

The data product concept represents a fundamental shift in thinking about analytical systems. Rather than optimising for centralised control and consistency, data mesh prioritises autonomy, domain expertise and semantic richness. This architectural approach aligns naturally with the distributed, API-driven world of modern software development.
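One way to picture a data product is as a small, self-describing unit that carries its owner and its semantics with it. The sketch below is an assumption for illustration only: the `DataProduct` and `Field` types, the team name and the field descriptions are hypothetical and do not reflect any particular data mesh platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    name: str
    dtype: str
    meaning: str  # business semantics recorded by the owning team


@dataclass(frozen=True)
class DataProduct:
    name: str
    owning_team: str
    fields: tuple[Field, ...]

    def describe(self) -> dict[str, str]:
        """Return field name -> meaning so consumers need not guess semantics."""
        return {f.name: f.meaning for f in self.fields}


# Hypothetical data product published by a metering team.
meter_reads = DataProduct(
    name="meter-reads",
    owning_team="metering",
    fields=(
        Field("meter_id", "string", "Identifier of the physical meter"),
        Field("consumption", "decimal", "Energy used in the interval, in kWh"),
    ),
)
```

Keeping the meaning next to the schema is the point of the sketch: the context that an extract-transform-load pipeline would typically discard travels with the data instead.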

Semantic technologies

The rise of Artificial Intelligence has renewed interest in semantic technologies that were previously considered academic curiosities. RDF, JSON-LD and related standards provide mechanisms for encoding meaning that goes beyond the relational model’s explicit relationships. The ability to infer knowledge rather than just store data becomes increasingly valuable as AI systems require richer context for accurate reasoning.

Knowledge graphs enable a different relationship between data and meaning. Where relational databases require explicit definition of all relationships, semantic technologies support inference and transitivity. This capability becomes particularly powerful when combined with well-established ontologies, such as QUDT for units of measure, which provide standardised vocabularies refined over years of use.
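The difference between storing and inferring can be sketched in a few lines of plain Python. This is an illustration only, not how an RDF store or reasoner is implemented; the class names and the `infer_transitive` helper are hypothetical. Only direct links are stored, and the rest follow from treating the predicate as transitive.

```python
from collections import defaultdict

# (subject, predicate, object) triples; only direct links are stored.
triples = {
    ("SmartMeter", "subClassOf", "Meter"),
    ("Meter", "subClassOf", "Device"),
    ("Device", "subClassOf", "Asset"),
}


def infer_transitive(facts, predicate):
    """Return the stored facts plus every fact implied by transitivity."""
    successors = defaultdict(set)
    for s, p, o in facts:
        if p == predicate:
            successors[s].add(o)

    inferred = set(facts)
    for start in list(successors):
        stack = list(successors[start])
        seen = set()  # guards against cycles in the data
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            inferred.add((start, predicate, node))
            stack.extend(successors.get(node, ()))
    return inferred


closure = infer_transitive(triples, "subClassOf")
```

After the call, `closure` contains facts such as `("SmartMeter", "subClassOf", "Asset")` that were never stored explicitly. In a relational schema, the equivalent answer would need a recursive query written for that specific relationship.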

The practical applications extend beyond theoretical knowledge representation. In domains like energy retail, standardising units of measure through semantic technologies eliminates the proliferation of inconsistent representations that plague analytical systems. The URIs that identify these concepts can be dereferenced to provide human-readable documentation, creating a bridge between machine-readable data and human understanding.
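A minimal sketch of what standardising on shared unit identifiers might look like in Python. The local spellings and the `canonical_unit` helper are hypothetical, and the URIs follow the naming pattern of the QUDT unit vocabulary as an assumption; they should be checked against the published ontology before use.

```python
# Assumed namespace for QUDT units; verify against the published vocabulary.
QUDT_UNIT = "http://qudt.org/vocab/unit/"

# Hypothetical local spellings, each mapped to one canonical identifier.
LOCAL_TO_QUDT = {
    "kwh": QUDT_UNIT + "KiloW-HR",
    "kw-h": QUDT_UNIT + "KiloW-HR",
    "kilowatt hour": QUDT_UNIT + "KiloW-HR",
    "mwh": QUDT_UNIT + "MegaW-HR",
}


def canonical_unit(label: str) -> str:
    """Map a free-text unit label to its canonical URI, or fail loudly."""
    key = " ".join(label.strip().lower().split())
    try:
        return LOCAL_TO_QUDT[key]
    except KeyError:
        raise ValueError(f"No unit mapping for {label!r}") from None
```

With this in place, `canonical_unit(" kWh ")` and `canonical_unit("Kilowatt  Hour")` resolve to the same identifier, so downstream analytics compare like with like. Raising on unknown labels, rather than passing them through, keeps new inconsistent spellings from entering the data unnoticed.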

AI and architectural reflexivity

The integration of AI into software development workflows creates new opportunities for architectural thinking. Rather than replacing human judgment, AI tools handle mechanical tasks while humans operate at higher levels of abstraction. This division of labour mirrors the broader pattern of architectural evolution, where successful abstractions allow practitioners to focus on increasingly complex problems.

The concept of reflexivity, borrowed from philosophical discourse, shows how AI might transform architectural practice. Reflexivity is a self-referential relationship where an entity relates to, acts upon or examines itself. For example, social structures shape individuals who then reshape those structures through their actions. In the same way, AI coding tools force explicit articulation of design decisions that were previously tacit knowledge. Those articulated decisions shape the architecture, which in turn shapes the prompts given to the AI tooling.

The limits of mechanisation

Despite the promise of AI-assisted development, fundamental questions about the nature of architectural knowledge remain. Much of what experienced architects bring to complex problems exists as background knowledge that may resist explicit articulation. The challenge of translating tacit understanding into executable instructions highlights the continued importance of human judgment in architectural decisions.

The tendency to automate artefact generation without questioning the value of those artefacts represents a failure to learn from the lessons of the framework era. Creating better tools for producing documentation that nobody reads doesn’t solve the underlying problem of misaligned incentives and unclear value propositions.

The most promising applications of AI in architectural contexts focus on specific, well-defined problems rather than attempting to automate architectural thinking itself. Natural language querying of data structures, automatic generation of interface mappings and automatic creation of test data all provide genuine value while preserving human agency in higher-level design decisions.

Toward emergent architecture

The evolution from framework-heavy methods to emergent, feedback-driven approaches reflects broader changes in how we understand complex systems. Rather than attempting to predict and control all aspects of system behaviour, contemporary architectural thinking emphasises adaptability, observability and resilience.

This shift doesn’t represent the abandonment of systematic thinking about architecture. Instead, it involves the selective application of proven concepts while remaining open to new approaches as contexts change. The archaeological metaphor suggests that valuable artefacts persist across eras, but their application must adapt to new circumstances.

The future of enterprise architecture likely lies in this balance between principled thinking and pragmatic adaptation. The frameworks that dominated the 1990s provide useful tools when applied selectively, but they cannot serve as comprehensive blueprints for contemporary challenges. The most effective architectural approaches will combine contextual principles and intentional architecture with emergent design, creating resilient systems that can evolve with changing forces and needs.

The IT fossil record continues to accumulate new layers, each reflecting the dominant concerns and capabilities of its era. The challenge for practising architects is distinguishing between the sedimentary accumulation of outdated methods and the bedrock principles that will guide effective system design.
